Course Design – Part Two – The TD Process

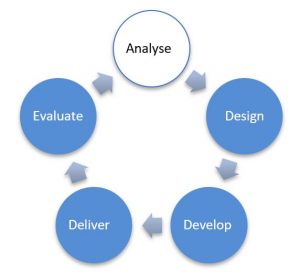

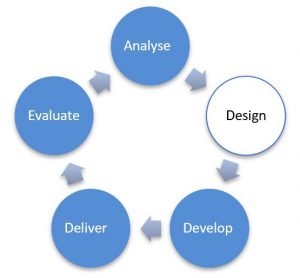

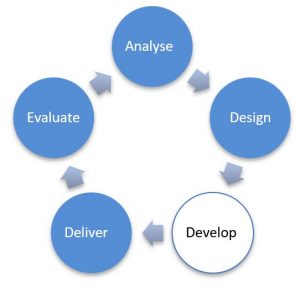

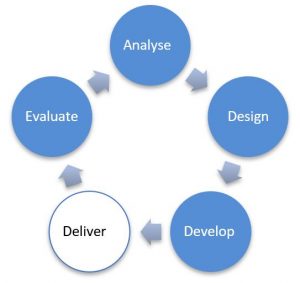

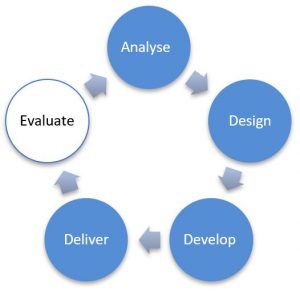

In part two of this article series on course design, we take a look at the training development process of Analyse, Design, Develop, Deliver and Evaluate.

The training development process

The first time I was introduced to a systems approach to training design was around 1997. I had just completed a couple of years training recruits at the 1st Recruit Training Battalion at Kapooka and had decided absolutely that, whatever my future held, it was going to involve training. A new edition of the Handbook of the Army Training System had just been released in 1997, and it described the phases of the Army Training System as Analyse, Design, Develop, Conduct and Validate. Of course, these phases were originally adapted from the US Army / Florida State University instructional design model developed back in 1975 (Wikipedia), which included the phases of Analysis, Design, Development, Implementation and Evaluation. If you want to dive into this in greater detail, just Google “ADDIE Model” and you will have it all before you! I personally prefer ‘Deliver’ over Conduct or Implement, and ‘Evaluate’ over Validate. That’s just my personal preference, as I think these terms better reflect our contemporary arrangements in VET today.

In a nutshell, it goes something like this:

- Analyse. Conduct an analysis of the environment for which training is being developed and decide on the approach to be taken in the course design to address the skilling need. By ‘environment’ I mean things like: what does the workplace need and expect, what does the training package require, who is the target learner, what is the preferred enrolment model, are there any restrictions or limitations to be considered, what are the financial considerations, is there a preferred or required duration, what facilities and resources are available, who will deliver the training, et cetera. At the end of the analysis phase, you will have considered all of these factors and selected a preferred design approach for the planned course. I should also point out that good analysis may show that training is not the preferred or only option. The analysis may show that an acceptable workplace capability could equally be achieved by other solutions, such as introducing new equipment or work aids into the workplace, without the need to train an entire cohort of people. The key to the analyse phase is to collect and analyse the information and keep your mind open to all of the options. Training is usually the “go to”, which I understand, but don’t simply assume it is the only solution. If the target learner is already employed performing the skills in industry, a suitable option may be to provide targeted gap training coupled with an assessment-only pathway, which results in the achievement of the training product in a more efficient way.

- Design. The design phase is where you start to play with the units of competency to determine the sequence and structure of the course, the weighting of each unit in the course, and the learning and assessment requirements. This is where we unpack each unit of competency to identify what the learner needs to learn, both in theory and in practice, and determine which assessment tasks are required. You will make decisions about delivery modes and assessment methods and piece these learning and assessment activities together into a course program over the target duration. With the wide availability of commercial course packages, much of this work may already be done, but you will still need to unpack all those materials to identify the learning and assessment activities and decide how they will be programmed over the course duration. At the end of the design phase, you will have a draft Training and Assessment Strategy, which includes a detailed course program showing the learning and assessment activities and how they are sequenced and structured over the course duration. In effect, you now have a map to take into the development phase.

- Develop. The development phase is where you develop the learning resources and assessment tools according to your course program and the agreed modes of delivery. If you are using a commercial course package, this is where you get to customise those materials according to your plan. They never come ready to go or compliant, so this is the time to pull them apart and piece them back together so that, if you have claimed that students will be undertaking a certain learning mode, you have the learning resources to support it. This also applies to the assessment. Maybe the knowledge assessment is too thin, or there are not sufficient observation tools and benchmarking to support the assessment. Maybe the assessment instructions are vague or the tasks are not valid. This is the time to work your way through all these materials and customise them. Try to stay aligned to the original course design, but if you find something along the way that needs to inform or change the design, speak up. It is better to make these changes now than halfway through a delivery. At the end of the develop phase, you will have an entire course curriculum, complete with a final Training and Assessment Strategy and all the learning resources and assessment tools ready for delivery.

- Deliver. The deliver phase (also referred to as Conduct or Implement) involves the enrolment of learners and the commencement of training and assessment according to the developed course program and curriculum. You may decide to run this first course as a pilot course, where heavier than usual quality and evaluation arrangements are put in place to monitor how the training and assessment are being received. A few relevant questions include: Is the allocated time sufficient? Did learners get enough time to practise before being assessed? Was the expectation of ‘self-paced learning’ unrealistic? The delivery phase is not just about delivering the training and assessment. It is also about evaluating how the training performed, based on the experience of those involved. This can be confusing, given that Evaluate is the next phase. A basic understanding of Kirkpatrick’s levels of learning evaluation (Wikipedia) helps explain this approach. In the delivery phase, we evaluate the experience of participants (level 1), including both learners and trainers, on what the training was like to deliver and receive. We also evaluate the learners’ acquisition of skills and knowledge through their engagement in the training (level 2). Did the training provide adequate preparation for assessment? How confident are learners to perform these tasks in the workplace? At the end of the pilot course, you produce a pilot course evaluation report that identifies all of the recommendations and opportunities for improvement, and you feed this into your ongoing course development for future delivery.

- Evaluate. The evaluation phase (also referred to as the validation phase) is usually conducted a bit further down the track (maybe 12 to 24 months later), once the learners have entered the workplace and have had a chance to apply their training in the workplace environment. The evaluation should look at two specific questions, again drawing on Kirkpatrick’s levels of learning evaluation. First, did the training adequately prepare the learner to perform the required tasks in the workplace (level 3)? Were there gaps (missed training)? Was there anything they were not prepared for (under training)? Were there any skills that were not required in the workplace (over training)? OK, there were lots of questions in that first question, but I think you get my point. Second, did the workplace get the personnel capability it needed (level 4)? Do you recall the very first thing we considered, right back in the analyse phase: “what does the workplace need and expect”? In this part of the evaluation, we need to determine whether industry was satisfied with the skills and knowledge the worker brought into the workplace. Were there any gaps from their perspective? How have the relevant KPIs that directly relate to worker skills performed (think safety, work remediation, waste, productivity, customer satisfaction, etc.)? Were the skills easily transferred to the workplace context, or are there areas where the RTO could improve to make the training more relevant?

I love this stuff. The reality is, we just do not do this anymore in either the public or private RTO sector. There may be some rare instances of highly motivated trainers and teachers following up with industry, but overall, it is not happening. The enterprise RTO world is much better at this, for obvious reasons. An enterprise RTO is usually a business unit within the organisation, and the effectiveness of its training is directly measured by business productivity and worker safety. Way to go! I suggested many years ago that subsidised training payments to RTOs should be linked to the actual outcomes measured in the workplace, rather than purely to learner completions. I know it would require a dramatic change to business as usual, but wow, what a great way to make sure training is accountable and directly aligned to industry requirements.

At the end of the evaluation phase, we feed all our recommendations and opportunities for improvement back into the course analysis and design for future delivery, and around we go. That is a very brief explanation of the training development process, and of course in this article series we are focusing on Analyse and Design only. In Part Three of this article series on course design, we dive into the analysis of the course requirements, including analysing the target learner, the workplace requirements and the training package requirements.


Good training,

Joe Newbery

Published: 16th May 2022

Copyright © Newbery Consulting 2021. All rights reserved.